Edge AI: what, why and how with open source

Edge AI is transforming the way devices interact with data centres, challenging organisations to keep up with the latest innovations. From AI-powered healthcare instruments to autonomous vehicles, plenty of use cases benefit from running artificial intelligence at the edge. This blog dives into the topic, covering the main benefits and challenges, key considerations when starting an edge AI project, and how open source fits into the picture.

What is Edge AI?

AI at the edge, or edge AI, combines artificial intelligence with edge computing. It executes machine learning models on interconnected edge devices, enabling them to make smarter decisions without always connecting to the cloud to process data. It is called edge AI because the machine learning model runs near the user rather than in a data centre.
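
Making decisions "without always connecting to the cloud" is often implemented as a store-and-forward pattern. The device acts on its own inference result immediately and only queues records for the data centre, flushing them whenever a connection is available. The sketch below is a minimal illustration under stated assumptions. The `EdgeNode` class, its `upload` callback, the threshold and the bounded in-memory queue are all hypothetical, not part of any specific product.

```python
from collections import deque
from typing import Callable, Deque, Dict


class EdgeNode:
    """Decides locally and forwards records to the cloud when it can (hypothetical)."""

    def __init__(self, upload: Callable[[Dict], bool], max_pending: int = 1000):
        self.upload = upload  # assumed to return True if the cloud accepted the record
        # Bounded buffer: if the device stays offline too long, the oldest records are dropped.
        self.pending: Deque[Dict] = deque(maxlen=max_pending)

    def handle(self, reading: float, threshold: float = 0.5) -> str:
        """Act on a reading immediately, without waiting for the network."""
        decision = "alert" if reading >= threshold else "ok"
        self.pending.append({"reading": reading, "decision": decision})
        return decision

    def sync(self, online: bool) -> int:
        """Flush queued records while online; return how many were delivered."""
        sent = 0
        while online and self.pending:
            if not self.upload(self.pending[0]):
                break  # keep the record and retry on the next sync
            self.pending.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    received = []

    def fake_upload(record: Dict) -> bool:
        # Stand-in for a real network call.
        received.append(record)
        return True

    node = EdgeNode(fake_upload)
    print(node.handle(0.9))         # alert (decided while offline)
    print(node.handle(0.1))         # ok
    print(node.sync(online=False))  # 0, nothing is sent while offline
    print(node.sync(online=True))   # 2
```

The bounded `deque` is a deliberate trade-off: it caps memory on a constrained device at the cost of losing the oldest records during a long outage.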

Edge AI is growing in popularity as industries identify new use cases and opportunities to optimise their workflows, automate business processes or find new ways to innovate. Self-driving cars, wearable devices, security cameras and smart home appliances are among the technologies that use edge AI capabilities to deliver information to users in real time, when it matters most.

Benefits of edge AI

Nowadays, algorithms can handle a range of tasks involving text, sound or images. They are particularly useful close to end users, where real-world problems arise. Many of these AI applications would be impractical or even impossible to deploy in a centralised cloud or enterprise data centre because of latency, bandwidth and privacy constraints.

Some of the most important benefits of edge AI are:

- Real-time insights: Because data is analysed close to the user, edge AI enables real-time processing and reduces the time needed to complete activities and derive insights.

- Cost savings: Depending on the use case, some data can be processed at the edge where it is collected, so not all of it has to be sent to the data centre to train machine learning models. This reduces the cost of storing data and of training the model. Organisations also use edge AI to reduce the power consumption of edge devices by optimising when they are switched on and off, which further reduces costs.

- High availability: Training and running models in a decentralised way ensures that edge devices keep benefiting from the model even if there is a problem in the data centre.

- Privacy: Edge AI can analyse data in real time without exposing it to humans, better protecting the appearance, voice or identity of the subjects involved. For example, surveillance camera footage does not need to be watched by a person; instead, machine learning models send alerts depending on the use case or need.

- Sustainability: Using edge AI to reduce the power consumption of edge devices doesn't just minimise costs; it also helps organisations become more sustainable. With edge AI, enterprises can avoid running their devices when they are not needed.
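
The cost-savings and privacy benefits above share one mechanism: inference happens where the data is captured, and only a small result travels upstream. A minimal sketch of that pattern follows. The `Frame` and `Alert` types, the stand-in scoring function, the 0.8 threshold and the 64-byte alert size are hypothetical values chosen for illustration, not measurements.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Frame:
    """A captured camera frame (hypothetical type; raw pixels would live in `data`)."""
    frame_id: int
    data: bytes


@dataclass
class Alert:
    """The only thing sent upstream: a tiny summary, never the frame itself."""
    frame_id: int
    score: float


def process_at_edge(
    frames: Iterable[Frame],
    score_frame: Callable[[Frame], float],
    threshold: float = 0.8,
) -> List[Alert]:
    """Run inference locally and keep alerts only for frames scoring at or above `threshold`."""
    alerts = []
    for frame in frames:
        score = score_frame(frame)  # local inference; the frame stays on the device
        if score >= threshold:
            alerts.append(Alert(frame.frame_id, score))
    return alerts


def bytes_avoided(frames: List[Frame], alerts: List[Alert], alert_size: int = 64) -> int:
    """Estimate upload volume saved versus sending every raw frame to the data centre."""
    raw = sum(len(f.data) for f in frames)
    return raw - alert_size * len(alerts)


if __name__ == "__main__":
    # Ten fake 100 kB frames; the stand-in "model" flags only frame 7.
    frames = [Frame(i, bytes(100_000)) for i in range(10)]

    def fake_model(frame: Frame) -> float:
        return 0.95 if frame.frame_id == 7 else 0.1

    alerts = process_at_edge(frames, fake_model)
    print(alerts)                         # [Alert(frame_id=7, score=0.95)]
    print(bytes_avoided(frames, alerts))  # 999936
```

Real savings depend on frame sizes, alert rates and what, if anything, must still be uploaded for retraining.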

Use cases in the industrial sector

Across verticals, enterprises are quickly developing and deploying edge AI models to address a wide variety of use cases. To get a better sense of the value that edge AI can deliver, let’s take a closer look at how it is being used in the industrial sector.

Industrial manufacturers often operate large facilities with a significant number of devices to manage. A survey fielded by Arm in the spring of 2023 found that edge computing and machine learning were among the top five technologies expected to have the most impact on manufacturing in the coming years. Edge AI use cases are often tied to the modernisation of existing factories. They include production scheduling, quality inspection and asset maintenance, but applications go beyond these. Their main objective is to improve the efficiency and speed of automation tasks such as product assembly and quality control.

Some of the most prominent use cases of edge AI in manufacturing include:

- Real-time detection of defects during quality inspection, using deep neural networks to analyse product images. Often, this also enables predictive maintenance, helping manufacturers address potential issues preemptively rather than reactively fixing their components.

- Execution of real-time production assembly tasks based on low-latency operations of industrial robots.

- Remote support of technicians on field tasks based on augmented reality (AR) and mixed reality (MR) devices.

Low latency is the primary driver of edge AI in the industrial sector. However, some use cases also benefit from improved security and privacy. For example, 3D printers can use edge AI to protect intellectual property through a centralised cloud infrastructure.

Best practices for edge AI

Compared to other kinds of AI projects, running AI at the edge comes with a unique set of challenges. To maximise the value of edge AI and avoid common pitfalls, we recommend following these best practices:

- Edge device: At the heart of edge AI are the devices that run the models. They differ in architecture, features and dependencies. Ensure that the capabilities of your hardware match the requirements of your AI model, and that the software, such as the operating system, is certified on the edge device.

- Security: Both data centres and edge devices hold artefacts that could compromise an organisation's security. Whether it is the data used for training, the ML infrastructure used to develop or deploy the model, or the operating system of the edge device, organisations need to protect all of these artefacts. Use the appropriate security capabilities to safeguard them, such as secure packages, secure boot of the operating system on the edge device, and full-disk encryption on the device.

- Machine learning model size: The size of the model varies with the use case. It needs to fit on the device it is intended to run on, so developers need to optimise the model size, which determines the chances of deploying it successfully.

- Network connection: The machine learning lifecycle is an iterative process, so models need to be updated periodically. The network connection therefore influences both data collection and model deployment. Organisations need to ensure there is a reliable network connection before deploying models or building an AI strategy.

- Latency: Organisations often use edge AI for real-time processing, so latency needs to be minimal. For example, retailers need instant alerts when fraud is detected and cannot ask customers to wait at the checkout for minutes before a payment is confirmed. Latency requirements depend on the use case and need to be assessed when choosing the tooling and the model update cadence.

- Scalability: Scale is often limited by the cloud bandwidth available to move and process information, which leads to high costs. To scale more broadly, data collection and part of the data processing should happen at the edge.

- Remote management: Organisations often have multiple devices or multiple remote locations, so scaling to all of them brings new challenges related to their management. To address these challenges, ensure that you have mechanisms in place for easy, remote provisioning and automated updates.
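
The model-size and latency practices above lend themselves to a quick pre-deployment check. The sketch below estimates the weight footprint from a parameter count and numeric precision (for example float32 versus 8-bit integers after quantisation) and compares a measured 95th-percentile inference latency against a budget. The parameter count, RAM budget, latency budget and timing samples are hypothetical, and the estimate covers weights only; activations and runtime overhead add to real memory use.

```python
from typing import Sequence

# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weights_mb(num_params: int, dtype: str) -> float:
    """Approximate size of the model weights in megabytes (weights only)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


def p95(latencies_ms: Sequence[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    if not ordered:
        raise ValueError("need at least one latency sample")
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def ready_for_device(
    num_params: int,
    dtype: str,
    ram_budget_mb: float,
    latencies_ms: Sequence[float],
    latency_budget_ms: float,
) -> bool:
    """True only if the weights fit the RAM budget and p95 latency is within budget."""
    fits = weights_mb(num_params, dtype) <= ram_budget_mb
    fast_enough = p95(latencies_ms) <= latency_budget_ms
    return fits and fast_enough


if __name__ == "__main__":
    latencies = [12.0] * 19 + [40.0]  # hypothetical measurements in ms
    print(weights_mb(25_000_000, "float32"))                          # 100.0
    print(weights_mb(25_000_000, "int8"))                             # 25.0
    print(ready_for_device(25_000_000, "float32", 64, latencies, 30))  # False
    print(ready_for_device(25_000_000, "int8", 64, latencies, 30))     # True
```

Using a high percentile rather than the mean reflects the retail example: a customer waiting at the checkout experiences the slow tail, not the average.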

Edge AI with open source

Open source is at the centre of the artificial intelligence revolution, and open source solutions can provide an effective path to addressing many of the best practices described above. When it comes to edge devices, open source technology can be used to ensure the security, robustness and reliability of both the device and machine learning model. It gives organisations the flexibility to choose from a wide spectrum of tools and technologies, benefit from community support and quickly get started without a huge investment. Open source tooling is available across all layers of the stack, from the operating system that runs on the edge device, to the MLOps platform used for training, to the frameworks used to deploy the machine learning model.

Edge AI with Canonical

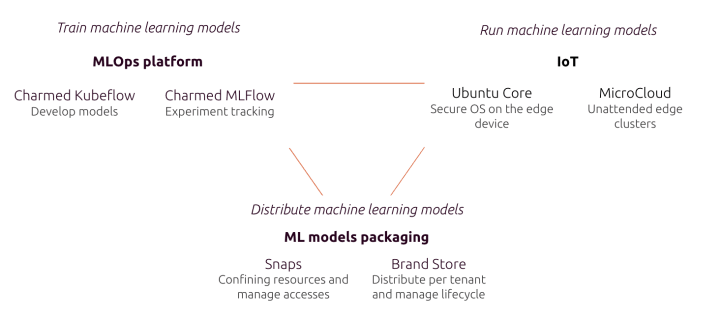

Canonical delivers a comprehensive AI stack with all the open source software organisations need for their edge AI projects.

Canonical offers an end-to-end MLOps solution that enables you to train your models. Charmed Kubeflow is the foundation of the solution, and it is seamlessly integrated with leading open source tooling such as MLflow for the model registry and Spark for data streaming. It gives organisations the flexibility to develop their models on any cloud platform and any Kubernetes distribution, and offers capabilities such as user management, security maintenance of the packages used, and managed services.

The operating system that the device runs plays an important role. Ubuntu Core is the edition of the open source Ubuntu operating system dedicated to IoT devices. It has capabilities such as secure boot and full-disk encryption to ensure the security of the device. For certain use cases, running a small cloud such as MicroCloud enables unattended edge clusters to leverage machine learning.

Packaging models as snaps makes them easy to maintain and update in production. Snaps offer a variety of benefits, including over-the-air (OTA) updates, automatic rollback in case of failure and no-touch deployment. For managing the lifecycle of the machine learning model and for remote management, brand stores are an ideal solution.
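
The idea behind automatic rollback can be shown with a small, generic sketch: activate a new model revision, run a health check, and revert to the previous revision if the check fails or crashes. This is not how snapd implements refreshes or rollbacks. The `ModelSlot` class, the revision strings and the `health_check` callback are hypothetical stand-ins that only illustrate the control flow.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ModelSlot:
    """Tracks the active model revision plus the history needed to roll back."""
    active: str
    history: List[str] = field(default_factory=list)

    def update(self, new_revision: str, health_check: Callable[[str], bool]) -> bool:
        """Activate `new_revision`; revert to the previous one if it fails the check."""
        self.history.append(self.active)
        self.active = new_revision
        try:
            healthy = health_check(new_revision)
        except Exception:
            healthy = False  # treat a crashing check as a failed update
        if not healthy:
            self.active = self.history.pop()  # automatic rollback
            return False
        return True


if __name__ == "__main__":
    slot = ModelSlot(active="rev-1")
    print(slot.update("rev-2", lambda rev: True))   # True, rev-2 is now active
    print(slot.update("rev-3", lambda rev: False))  # False, rolled back
    print(slot.active)                              # rev-2
```

Keeping the previous revision around until the new one proves healthy is what makes unattended updates safe on devices nobody can physically reach.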

To learn more about Canonical’s edge AI solutions, get in touch.
